Graduate Student, University of Illinois at Urbana-Champaign

Undergraduate Student, Tsinghua University

Hi there! I'm Zhe Wang, a second-year M.S. student at the University of Illinois at Urbana-Champaign, majoring in Computer Science. I am advised by Prof. Lingming Zhang. Before coming to UIUC, I completed my undergraduate studies at Tsinghua University, majoring in Mathematics and Physics.

Research Interests

My research interests lie at the intersection of Artificial Intelligence and Software Engineering. More specifically:

- LLMs for Code: to develop LLMs for code through post-training via reasoning [PurpCode] and data-centric alignment [Magicoder]

- AI for Cybersecurity: to enhance the trustworthiness, reliability, and security of software systems against malicious cyberactivities [PurpCode]

- Agents for Software Engineering: to empower LLM agents with the ability to collaborate [MultiAgentBench] and self-evolve [Live-SWE-agent] on real-world software engineering tasks

I am currently seeking a Ph.D. position starting in Fall 2026. Feel free to drop me an email if you are interested in my research or have any questions. Please find my detailed Curriculum Vitae below:

Selected Publications

Live-SWE-agent: Can Software Engineering Agents Self-Evolve on the Fly?

Chunqiu Steven Xia, Zhe Wang, Yan Yang, Yuxiang Wei, Lingming Zhang

🚀 Top-1 Open-Source Agent Performance on SWE-bench Verified

In this paper, we propose Live-SWE-agent, the first live software agent that can autonomously and continuously evolve itself on-the-fly during runtime when solving real-world software problems. More specifically, Live-SWE-agent starts with the most basic agent scaffold with only access to bash tools (e.g., mini-SWE-agent), and autonomously evolves its own scaffold implementation while solving real-world software problems. Our evaluation on the widely studied SWE-bench Verified benchmark shows that Live-SWE-agent can achieve an impressive solve rate of 75.4% without test-time scaling, outperforming all existing open-source software agents and approaching the performance of the best proprietary solution. Moreover, Live-SWE-agent outperforms state-of-the-art manually crafted software agents on the recent SWE-Bench Pro benchmark, achieving the best-known solve rate of 45.8%.

PurpCode: Reasoning for Safer Code Generation

Jiawei Liu*, Nirav Diwan*, Zhe Wang*, Haoyu Zhai, Xiaona Zhou, Kiet A. Nguyen, Tianjiao Yu, Muntasir Wahed, Yinlin Deng, Hadjer Benkraouda, Yuxiang Wei, Lingming Zhang, Ismini Lourentzou, Gang Wang (* equal contribution)

🥇 1st Place in Amazon Nova AI Challenge 2025 ($250,000)

NeurIPS 2025

We introduce PurpCode, the first post-training recipe for training safe code reasoning models towards generating secure code and defending against malicious cyberactivities. PurpCode trains a reasoning model in two stages: (i) Rule Learning, which explicitly teaches the model to reference cybersafety rules to generate vulnerability-free code and to avoid facilitating malicious cyberactivities; and (ii) Reinforcement Learning, which optimizes model safety and preserves model utility through diverse, multi-objective reward mechanisms.

MultiAgentBench: Evaluating the Collaboration and Competition of LLM agents

Kunlun Zhu*, Hongyi Du*, Zhaochen Hong*, Xiaocheng Yang*, Shuyi Guo*, Zhe Wang*, Zhenhailong Wang, Cheng Qian, Xiangru Tang, Heng Ji, Jiaxuan You (* equal contribution)

ACL 2025 Main

In this paper, we introduce MultiAgentBench, a comprehensive benchmark designed to evaluate LLM-based multi-agent systems across diverse, interactive scenarios. Our framework measures not only task completion but also the quality of collaboration and competition using novel, milestone-based key performance indicators.

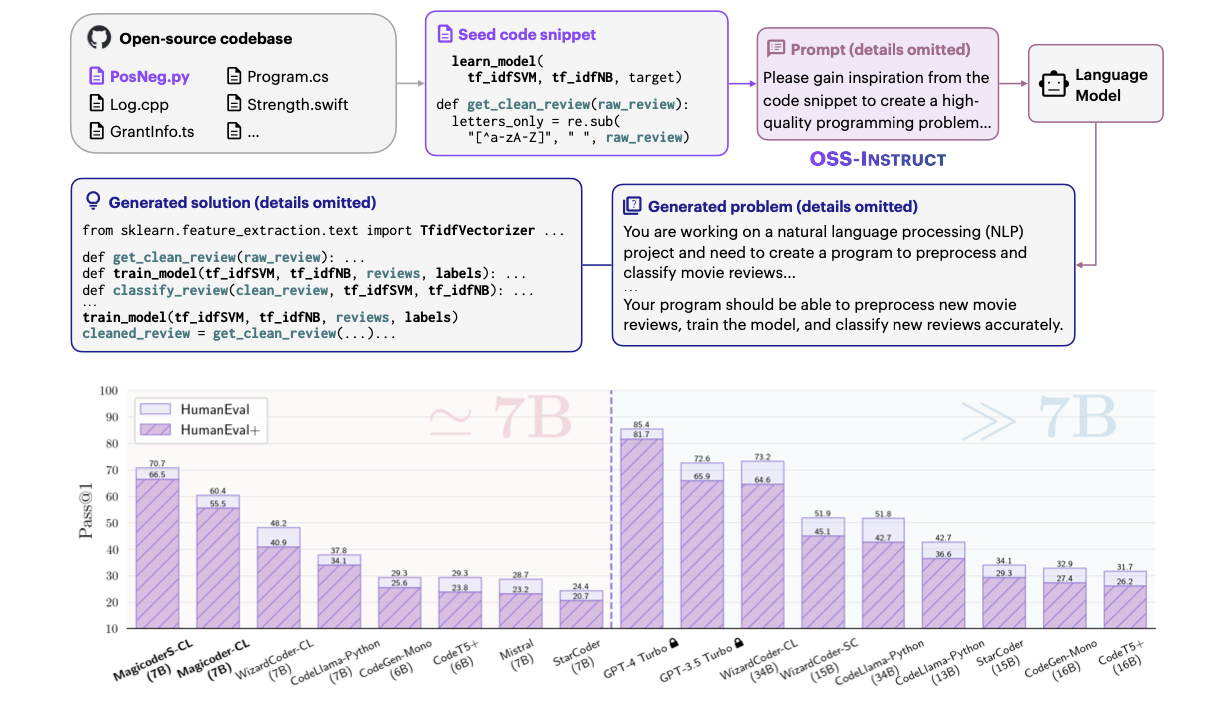

Magicoder: Empowering Code Generation with OSS-Instruct

Yuxiang Wei, Zhe Wang, Jiawei Liu, Yifeng Ding, Lingming Zhang

ICML 2024

In this paper, we introduce Magicoder, a series of fully open-source (code, weights, and data) Large Language Models (LLMs) for code that significantly closes the gap with top code models while having no more than 7B parameters.

Education

- University of Illinois at Urbana-Champaign, M.S. in Computer Science, Aug. 2024 - Present

- Tsinghua University, B.S. in Mathematics and Physics, Sep. 2020 - Jul. 2024

- Shanghai High School, High School, Sep. 2017 - Jun. 2020